Greetings Razzball Nation. Unlike that lazy Pennsylvanian rodent, I’ve arisen from my winter bunker a month earlier than usual to recap what turned out to be a memorable Year 2 in the life of the Razzball Pigskinonator, aka my first robot child with my second android wife (I had the MLB Streamonator and Hittertron with Wife #1, NBA Stocktonator with Wife #3. While Jewish by birth, my Android love is Mormonesque.)

As many of you know, FantasyPros offers a platform where “experts” rank fantasy football players by position, and FantasyPros then uses its own methodology to rank the accuracy of said experts. They have been operating since 2009, and our lead Razzball writers (Chet aka Doc, Sky, Jay) have been participating since 2010 with two top-10 finishes (Doc #6 in 2010, Jay #9 in 2016). The contest typically has 100-120 participants from various fantasy football publications/blogs. I am no expert on fantasy experts, but it seems like the only notable absences in the 2017 bunch are:

- Writers/publications with a big enough NFL customer/readership base that they do not need the extra exposure (e.g., ESPN, NFL Network/NFL.com, Footballguys.com).

- DFS touts that, by and large, do not typically expose their rankings to third-party scrutiny/non-paying customers.

I threw a newborn Pigskinonator into the FantasyPros’ shark-infested waters in 2016 and, well, it was a learning experience. I stuck to the rankings from the ‘bot, using projections that veered wildly from consensus as opportunities to dig into the code and test the underlying assumptions. I finished in the bottom half and found my model was consistently one of the most ‘unique’ in terms of how much it differed from the overall consensus.

I use the term ‘unique’ vs ‘bold’ for two reasons:

- Boldness signifies intent. I am not trying to be different. My brain works a certain way and that influences how I build my projection model. The only thing remotely bold about my method is that I can give 1.97 shits how the consensus ranks a player. But my only true intent is to project as accurately as possible.

- The fantasy community has co-opted ‘bold’ to mean “Take a wild-ass guess and claim credit if you’re right”.

So my two goals for 2017 were to improve the accuracy of my model and, hopefully, retain as much uniqueness as possible. For the sake of indulging my pathological need to break up paragraphs with bulleted and numbered points, here is how I would categorize expert advice. My goal for 2017 was to move from #3 to either #1 or #2.

1) Unconventional Wisdom – Accurate + Unique vs. Consensus

2) Conventional Wisdom – Accurate + Not Unique vs. Consensus

3) Unsuccessful Boldness – Inaccurate + Unique vs. Consensus

Goal #1 – Improve Accuracy

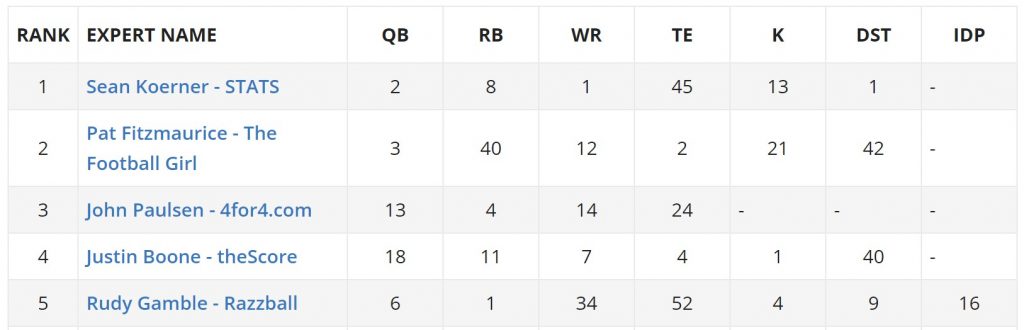

Goal accomplished. I nabbed Razzball’s first top-5 finish and tied the #1 finisher (three-peater Sean Koerner of STATS) with four positional top-10 finishes.

(Note: Our lead writer – Jason “JayWrong” Longfellow finished #23 – his third straight top 20% finish!)

One interesting (to me at least) note on my season is that I was around 70th after Week 6. I started strong in Weeks 1-3 but hit a valley in Weeks 4-6. From Week 7 through Week 16, I was the most accurate expert, with four #1 finishes in the final 8 weeks. (Only two other experts – #2 finisher Pat Fitzmaurice and #10 Bill Enright of FFChamps – had multiple #1 finishes in 2017. Both had two.)

I cannot explain the sudden turnaround. It wasn’t like I dug in the code between Week 6 and Week 7 and found a massive error. I will selfishly credit it to finally getting ‘over the hump’ after 22 weeks of (Walt Frazier voice) fiddling and middling.

Goal #2 – Retain Uniqueness

Before I dig into the results, I will provide a 101 on the value of ‘uniqueness’ and how it is reflected in the FantasyPros Accuracy contest.

In my opinion, the value of ‘uniqueness’ in ranking/projecting fantasy players is either 1a or a close 2nd to accuracy’s #1. Being able to identify ‘breakout’ candidates for a week with some level of consistency has huge value for season-long (finding guys on the waiver wire) and DFS (non-‘chalk’ / low ownership, high value players).

Alas, the current iteration of the FantasyPros Accuracy contest does not measure/value this variable. If anything, the methodology has a penalty for being unique. This is a bit of a rabbit hole, so feel free to skip the next few paragraphs if you want.

The FantasyPros methodology compares actual vs. projected values for three overlapping player universes (the X varies by position category – QB/RB/WR/TE): 1) players who actually finished in the top X for points that week, 2) players the expert ranked in the top X, and 3) players the consensus ranked in the top X. Each player an expert is graded on adds the absolute difference between that player’s actual and projected points to the expert’s score. Since the ‘winner’ is the one with the fewest points, each player one is graded on is like an additional hole in golf.
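To make the scoring concrete, here is a minimal Python sketch of the methodology as described above. It is not FantasyPros’ actual code: the function names and sample numbers are hypothetical, and it assumes each expert’s rankings (and the consensus) have already been converted into projected points per player.

```python
# Minimal sketch of the "three overlapping groups" accuracy score described
# above. Hypothetical names and data; NOT FantasyPros' real implementation.

def top_x(points: dict[str, float], x: int) -> set[str]:
    """The x players with the most points (ties broken alphabetically)."""
    ordered = sorted(points, key=lambda p: (-points[p], p))
    return set(ordered[:x])


def accuracy_score(projected: dict[str, float],
                   actual: dict[str, float],
                   consensus: dict[str, float],
                   x: int) -> float:
    """Sum of |actual - projected| over every graded player (lower is better)."""
    graded = (
        top_x(actual, x)        # group 1: players who actually finished top X
        | top_x(projected, x)   # group 2: the expert's top X
        | top_x(consensus, x)   # group 3: the consensus top X
    )
    # Each graded player is one more "hole": its error adds to the total.
    return sum(abs(actual[p] - projected[p]) for p in graded)


actual = {"A": 25, "B": 20, "C": 15, "D": 10}
projected = {"A": 22, "B": 12, "C": 18, "D": 14}
consensus = {"A": 24, "B": 21, "C": 11, "D": 13}
print(accuracy_score(projected, actual, consensus, x=2))
```

With X = 2 this expert is graded on A, B and C (C only because it was in their own top 2), for a total error of 3 + 8 + 3 = 14.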

Groups #1 and #2 create a level playing field for all participants. More accurate rankers (those who had more of the actual top X players in their rankings) are graded on fewer players, since those two groups overlap more. Player group #3, however, only adds players who both did not finish in the top X AND were not in the expert’s top X. Since more ‘unique’ rankings are more likely to leave out players in the consensus top X, ‘unique’ rankers are more likely to have players in that #3 category, which, going back to the golf metaphor, means having to play an extra hole or two. Even if you hit a hole-in-one, that still hurts your overall score.
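A toy example with hypothetical players shows why group #3 only bites rankers who stray from the consensus. An expert who mirrors the consensus top X can never have a group #3 player by construction, while an expert who correctly swaps a consensus pick for a breakout player still gets graded on the pick they dropped. This is an illustration of the reasoning, not FantasyPros’ code.

```python
# Toy illustration (hypothetical players) of which players group #3 adds.

def group3_extras(actual_top: set[str], expert_top: set[str],
                  consensus_top: set[str]) -> set[str]:
    """Consensus top-X players who were in neither the actual nor the expert top X."""
    return consensus_top - actual_top - expert_top


actual_top = {"A", "B", "C", "D"}
consensus_top = {"A", "B", "C", "E"}   # consensus liked E; D broke out instead

conformist_top = set(consensus_top)    # mirrors the consensus exactly
unique_top = {"A", "B", "C", "D"}      # correctly swapped E for D

print(sorted(group3_extras(actual_top, conformist_top, consensus_top)))
print(sorted(group3_extras(actual_top, unique_top, consensus_top)))
```

The conformist is graded on E too, but through group #2 (its own miss). The unique ranker is graded on E despite leaving him out correctly, which is the extra hole.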

The impact of this penalty is mild for all positions save for IDP, but my rough guess is that it could be upwards of 20 ranking spots depending on where you rank. For IDP, where general scoring randomness makes the consensus picks less informed/predictive, this penalty is crippling. While I did not focus much on IDP in 2017, the fact that I was the most unique IDP ranker AND finished top 10 in team DST, yet finished last just about every week for 16 weeks in IDP, leads me to believe there is a healthy correlation.

(Note: The quickest/easiest remedy for that is to remove player group #3 – if an expert ranks a player outside the top X AND is correct, the expert should not be graded on the player)
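The proposed remedy is easy to express in the same terms. In this sketch (hypothetical names and numbers, not FantasyPros’ code), an expert correctly leaves consensus pick C out of a top 2 but still loses points on C under the current rules, and stops losing them once group #3 is dropped. It assumes the expert’s projection for C comes from ranking him outside the top X.

```python
# Sketch of scoring with and without player group #3 (hypothetical data).

def score(projected, actual, actual_top, expert_top, consensus_top,
          include_group3=True):
    """Sum of absolute errors over the graded players (lower is better)."""
    graded = actual_top | expert_top          # groups 1 and 2
    if include_group3:
        graded = graded | consensus_top       # group 3 (the current rules)
    return sum(abs(actual[p] - projected[p]) for p in graded)


actual = {"A": 20, "B": 15, "C": 5}
projected = {"A": 20, "B": 15, "C": 9}  # expert ranked C outside the top 2
actual_top, expert_top, consensus_top = {"A", "B"}, {"A", "B"}, {"A", "C"}

current = score(projected, actual, actual_top, expert_top, consensus_top)
remedy = score(projected, actual, actual_top, expert_top, consensus_top,
               include_group3=False)
print(current, remedy)
```

Under the current rules this otherwise perfect expert is charged 4 points for C; with group #3 removed, the score is 0.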

While FantasyPros does not factor in ‘uniqueness’ or display any related metric on their public site, they DO have a valuable measure in their expert portal called ‘ECR Differential’, which captures the absolute difference between an expert’s player ranks and the consensus ranks. A score of 1.00 for QB would mean an expert’s QB ranks were, on average, +/- 1 away from the consensus ranks. Zero would mean the expert just mirrored the consensus. The further an expert is from zero, the more ‘unique’ their rankings.
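For intuition, here is a hypothetical Python version of an ECR-Differential-style metric. FantasyPros’ exact formula is not given here, so this sketch assumes it is the average absolute rank gap per player, which matches the “+/- 1” reading of a 1.00 score. The function name and QB labels are made up.

```python
# Hypothetical ECR-Differential-style metric: average |expert rank - consensus rank|.

def ecr_differential(expert_rank: dict[str, int],
                     consensus_rank: dict[str, int]) -> float:
    shared = expert_rank.keys() & consensus_rank.keys()  # players both ranked
    if not shared:
        raise ValueError("no players ranked by both the expert and the consensus")
    total_gap = sum(abs(expert_rank[p] - consensus_rank[p]) for p in shared)
    return total_gap / len(shared)


consensus = {"QB1": 1, "QB2": 2, "QB3": 3, "QB4": 4}
expert = {"QB1": 2, "QB2": 1, "QB3": 4, "QB4": 3}   # every QB off by one spot

print(ecr_differential(consensus, consensus))  # mirroring the consensus
print(ecr_differential(expert, consensus))     # off by one everywhere
```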

Below is a scattergram showing the accuracy and uniqueness of the 97 experts who published rankings in all 16 weeks. I converted the FantasyPros accuracy scores and average weekly ECR differential scores into standard deviations so they are on the same scale and it is easy to determine above vs. below average.
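Converting to standard deviations is a standard z-score transform. Here is a minimal sketch under two assumptions: population standard deviation, and lower raw accuracy scores being better (per the golf analogy above), so the accuracy values are sign-flipped to make “above average” mean “more accurate”. The sample numbers are made up.

```python
from statistics import mean, pstdev


def to_z_scores(values: list[float], higher_is_better: bool = True) -> list[float]:
    """Express each value as standard deviations from the group mean.

    If lower raw values are better (e.g. a golf-style error total), the
    difference is flipped so a positive z-score always means 'better than average'.
    """
    mu = mean(values)
    sigma = pstdev(values)  # population SD (an assumption; sample SD also works)
    if sigma == 0:
        raise ValueError("all values are identical; z-scores are undefined")
    diffs = [(v - mu) if higher_is_better else (mu - v) for v in values]
    return [d / sigma for d in diffs]


accuracy_error = [100.0, 110.0, 120.0]   # made-up error totals (lower is better)
ecr_diff = [2.0, 4.0, 6.0]               # made-up ECR differentials

print(to_z_scores(accuracy_error, higher_is_better=False))
print(to_z_scores(ecr_diff))
```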

As with just about every X/Y axis graph, the top right quadrant is the best place to be (“Unconventional Wisdom”) and the bottom left quadrant is the worst place (Unsuccessful & Conventional?). Bottom right (“Conventional Wisdom”) is better than top left, which reflects “Unsuccessful Boldness”.
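The quadrant labels map directly onto the signs of the two z-scores. A small sketch, assuming Accuracy is on the x-axis and Uniqueness on the y-axis (as the Point A/B descriptions below suggest) and counting an exactly-average score as above average; the function name is hypothetical.

```python
def quadrant(accuracy_z: float, uniqueness_z: float) -> str:
    """Label an expert by the sign of their accuracy and uniqueness z-scores."""
    accurate = accuracy_z >= 0
    unique = uniqueness_z >= 0
    if accurate and unique:
        return "Unconventional Wisdom"        # top right
    if accurate:
        return "Conventional Wisdom"          # bottom right
    if unique:
        return "Unsuccessful Boldness"        # top left
    return "Unsuccessful & Conventional"      # bottom left


print(quadrant(2.0, -0.5))   # roughly Point A
print(quadrant(1.5, 3.0))    # roughly Point B
```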

A couple of key points:

- There is a fairly significant negative correlation between ‘accurate’ and ‘unique’. If the stat-geeky correlation of -.308 means nothing to you, you can see that most of the dots appear in the quadrants where Accuracy and Uniqueness point in opposite directions: top left (Unique but Inaccurate) and bottom right (Accurate but not Unique). I believe the uniqueness penalty noted above plays some role in this correlation.

- It is nearly impossible with the naked eye to distinguish the Accuracy difference between experts who are ranked close to one another. To underscore how close spots 2-10 are, I believe that if Trevor Siemian had not gotten injured in the Week 15 Broncos-Colts game and had put up a performance remotely close to that of universally-panned backup Brock Osweiler, I would have finished 2nd. Conversely, if Blake Bortles or Alex Smith had gotten hurt in Week 13, I would have finished around 10th.

- Two data points stand out to me:

- Point A is in the bottom right quadrant at about 2 standard deviations above average on Accuracy and about 0.5 standard deviations below average on Uniqueness.

- Point B is in the top right quadrant at ~1.5 standard deviations above average on Accuracy and just above 3 standard deviations above average on Uniqueness.

Point A = The Accuracy champ Sean Koerner, whose ~0.6 standard deviation margin over 2nd place was the same as the margin between 2nd place and 26th place!

Point B = The dude writing this post, who is nearly 2 standard deviations more unique than the next-most-unique expert who was above average in Accuracy (Staff Rankings for DailyRoto.com). (FantasyPros noted something similar in their year-end recap.)
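For readers curious about the correlation figure quoted in the first bullet above: Pearson’s r runs from -1 to 1, and r² (its square) can never be negative, so a negative value can only be r itself. Below is a minimal standard-library sketch of r (Python 3.10+ also ships `statistics.correlation`, which computes the same coefficient); the input z-scores are made up.

```python
from statistics import mean, pstdev


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    mx, my = mean(xs), mean(ys)
    # Population covariance pairs with population SDs, so the n's cancel.
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)
    sx, sy = pstdev(xs), pstdev(ys)
    if sx == 0 or sy == 0:
        raise ValueError("a constant sample has no defined correlation")
    return cov / (sx * sy)


accuracy_z = [1.0, 0.5, 0.0, -0.5, -1.0]     # made-up z-scores
uniqueness_z = [-0.8, 0.1, 0.3, -0.2, 0.6]
r = pearson_r(accuracy_z, uniqueness_z)
print(round(r, 3), round(r ** 2, 3))  # r can be negative; r squared cannot
```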

Below is the same scattergram but limited to the top 50 Accuracy finishers. This removes a number of “Unsuccessfully Bold” experts and turns the relationship between Accuracy and Uniqueness to basically zero. Sean is now nearly 3 standard deviations more accurate than the average in this group, and I am 4.5 standard deviations above average on uniqueness. In a normal distribution, about 99.7% of results fall within +/- 3 standard deviations of the average, so these results are quite abnormal.
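The ±3 standard deviation rule of thumb is a textbook property of the normal distribution (it is not derived from the contest data): the share of results within ±k standard deviations is erf(k/√2). A quick check with Python’s standard library gives about 68%, 95% and 99.7% for k = 1, 2 and 3, and shows how rare 4.5 standard deviations would be if the scores were roughly normal.

```python
import math


def share_within(k: float) -> float:
    """Share of a normal distribution within +/- k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))


for k in (1, 2, 3, 4.5):
    inside = share_within(k)
    print(f"+/-{k} SD: {inside:.5%} inside, {1 - inside:.6%} outside")
```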

Net-net, Goal #2 of retaining uniqueness in my rankings was definitely accomplished.

Final aside: I cannot say for sure why my rankings are so unique vs. the consensus, but one thing I have observed is that the range of rankings across the more accurate experts is absurdly narrow. If a player is consensus RB20, it is not surprising that almost all the top experts have him between RB19 and RB21. One explanation could be that everyone has developed similar models/tendencies/biases. An alternate, non-exclusive explanation is that the default rankings in the FantasyPros Expert Portal, when one begins their weekly rankings, are the consensus rankings. Since many experts adjust manually up/down from that starting point, the initial value has a gravitational pull, so someone thinking “He’s RB10” might second-guess themselves and hedge with a ranking closer to RB20. Additionally, some rankers may not have a POV on a certain player and just leave him unadjusted. It would be a fascinating experiment for rankers to rank blind to consensus and see what the impact would be on the range of ranks. FWIW, I manually cut/paste my rankings into FantasyPros’ import tool and then later review the deltas vs. consensus to determine if there are any players I want to use as excuses to dig into the code or reconsider role/playing time. Usually it just helps me identify who I am rooting for/against on a given Sunday, like these Week 13 QB picks…

— (((Rudy Gamble))) (@rudygamble) December 3, 2017

Conclusion

Apologies that the above and subsequent text reads as braggy, but, as Will Rogers once said (a Google search for the quote informed me), “It ain’t bragging if it’s true.” So if I didn’t write it, I would be hiding the truth, and that is a bad thing, right?

In 2018, the only goal left to accomplish is to finish #1 in both FantasyPros Accuracy and Uniqueness (among the top 50). If you had asked me in September whether this was possible, I would have said no way. But if I could finish first in accuracy from Weeks 7-16 while being super-unique, it’s possible to do it for Weeks 1-16.

If you signed up for my NFL projections in 2017, thanks for having faith! Hope you did well and hope to see you again next year! (And check out our NBA projections if you play….)

If you didn’t sign up, I kindly suggest jumping on board in 2018. If you play DFS, we will give you free access for Week 17 + the playoffs if you sign up for our NBA DFS projections! (just shoot me an e-mail after signing up)